On the evening of the polling day, some parts of London and southern England were hit by very bad weather, while other areas experienced nice weather with high temperatures and sunshine. The weather could have affected the Brexit vote by affecting Turnout or by affecting Voting Intentions.

To cut a long story short: there is, using very crude data, no evidence suggesting that the rainfall affected turnout. There is weak evidence, suggesting that the good weather in parts of the country, may have induced some voters to vote leave in the “heat of the moment”. But: overall, its unlikely that this would have changed the results.

The Brexit voters had an overall decisive lead with more than a million votes. Nevertheless, it would be interesting to see whether the “Great British Summer” contributed to the result. Further, there is quite a bit of serious economics research that document relationships, for example between rainfall and conflict, or between temperatures and aggressive behavior. But how could the weather have affected the Brexit vote?

Polling on a working day Coming from Germany, it struck me as extremely odd, why polls would take place on Thursdays – a working day. I feel that this is one of those odd English institutions or traditions created by the elites to – de-facto – limit the franchise of the working class. Naturally, it is much more difficult for the (commuting) employed  to cast their vote, as they have to do it, a) either in advance through a postal vote, b) in the morning or evening hours, which coincide with the commuting hours or, c) during the day or their lunch break. However, commuting makes it difficult as voters can register only at one polling station to cast their vote.

The opportunity cost of voting is unevenly distributed. The retired or unemployed, who turned out to be, on average more likely to vote Brexit had lower opportunity cost of voting. The uneven distribution of the opportunity cost of voting due to polling on a working day could have affected the electoral outcome, as voters who are young (and employed) were more likely to vote remain.

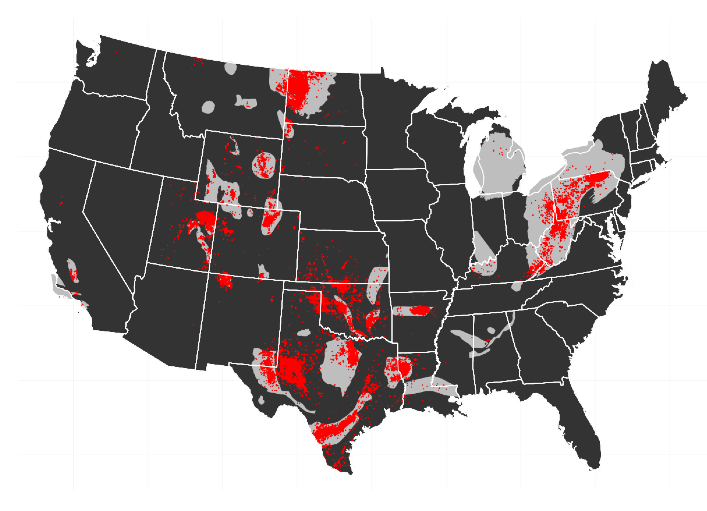

Come the weather. London, and some parts of the south saw in less than 24 hours, the amount of rainfall that usually falls within a whole month (see BBC article below). The reason, why I thought that the weather could have affected the Brexit vote is simple. Several parts of the South of England, the commuting belt around London, was affected by severe weather on the 23rd – both in the morning and the evening. Having lived and commuted from Guildford for a year, I know how painful the commute is. You may leave work, get drenched and wet along the way and then, get stuck in Waterloo or Victoria station, where there are few trains running. By the time you get home, you really may not have the energy to get up and vote. Especially since during the day, the markets were trending up, potentially suggesting that Bremain would be a done deal.  The current #Bregret wave on Twitter also suggests, that some voters simply didnt take things serious and may have decided not to vote, as the sun was out in some parts of England.Â

London and the South, one of the few islands of strong Bremain support experienced a months rainfall within 24 hours. Its not unreasonable to assume that the bad weather could, for all these mixtures of reasons, conributed to the voting outcome

So what do the data say? The results of the referendum, broken up by Local Authority Districts is published on the electoral commission website.

This data can easily be downloaded and loaded into R (dropbox link with all data and code). What turned out to be much more difficult to get is very recent weather data – and maybe that is something, where this analysis still has lots of room for improvement. The only data that I could find that is recent enough is from the following MET Office data sharing service.

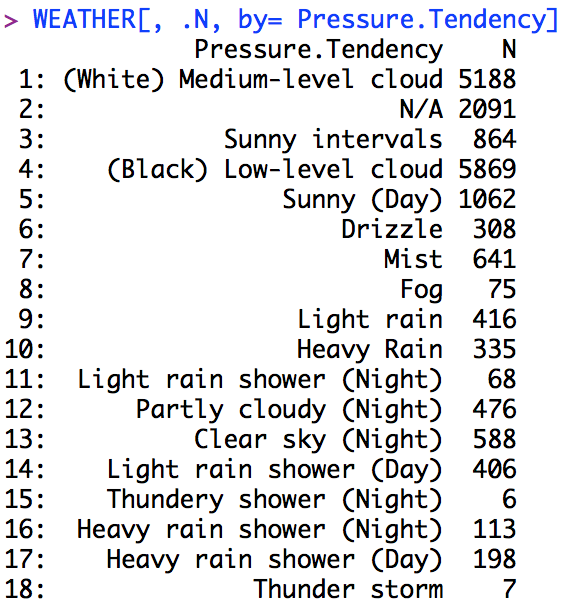

The data sharing service provides hourly observation data for 135 weather stations in the UK. Unfortunately, it does not provide the amount of rainfall in mm, but rather, just a categorization of the weather condition into classes such as

In addition, the data provides the temperature, wind speed and pressure. I construct a variable that measures the number of hours in which the weather was classified as “Heavy Rain” or  involved “Thunder”. Similarly, I construct daily averages of temperature.

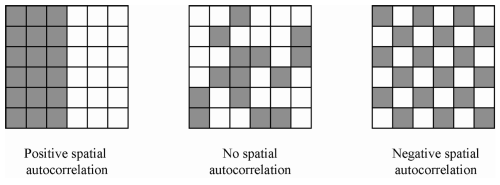

Matching Local Authority Districts to weather points. As indicated, the weather data leaves a lot of room for improvement due to its coarseness and if you have any further data suggestions, let me know. I assign a weather observation, for which I have the latitude and longitude to the centroid of the nearest Local Authority District. I obtained a shapefile of Local Authority Districts covering just England.

Did the weather affect the referendum? There are at least two margins. First, the weather could affect turn out, as suggested above. Second, the weather could affect the mood. Lastly, the weather could have no effect overall.

Did the weather affect turnout? Probably not. The first set of regressions just explores the number of hours it rained during the day, the number of hours it rained in the morning and afternoon/ evening, the last puts both together. Further, I include the average temperature as regressor. Also, I include for Region fixed effect and the regressions are estimated just off English data, for which I could match the data to a shapefile. Throughout, no statistical association between rainfall and turnout appears in the data. This being said, the rainfall proxy is very crude, while temperature is more precisely measured. And here is at least a weak association suggesting that a 1 degree increase in mean temperature, reduced turnout by 0.35 percentage points. But the coefficient is too imprecise for any meaningful association.

summary(felm(Pct_Turnout ~ rainingcommuting23 + rainingnoncommuting23+ temp23 | Region | 0 | id, data=MERGED))

## ## Call: ## felm(formula = Pct_Turnout ~ rainingcommuting23 + rainingnoncommuting23 + temp23 | Region | 0 | id, data = MERGED) ## ## Residuals: ## Min 1Q Median 3Q Max ## -0.142857 -0.026002 0.003213 0.027800 0.127797 ## ## Coefficients: ## Estimate Cluster s.e. t value Pr(>|t|) ## rainingcommuting23 -0.001257 0.002663 -0.472 0.637 ## rainingnoncommuting23 0.001951 0.002007 0.972 0.332 ## temp23 -0.003253 0.002660 -1.223 0.222 ## ## Residual standard error: 0.04087 on 308 degrees of freedom ## (62 observations deleted due to missingness) ## Multiple R-squared(full model): 0.3352 Adjusted R-squared: 0.3114 ## Multiple R-squared(proj model): 0.008567 Adjusted R-squared: -0.02684 ## F-statistic(full model, *iid*):14.12 on 11 and 308 DF, p-value: < 2.2e-16 ## F-statistic(proj model): 0.7966 on 3 and 308 DF, p-value: 0.4965

We can perform a type of placebo, by checking whether weather conditions on the day before the referendum had an effect. The only coefficient that gets anywhere close is rainfall during commuting hours on the day before. But again, there is a lack of precision. In any case, ideally finer data at the polling station level in addition to finer weather data is needed.

summary(felm(Pct_Turnout ~ rainingcommuting22 + rainingnoncommuting22+ temp22 | Region | 0 | id, data=MERGED))

## ## Call: ## felm(formula = Pct_Turnout ~ rainingcommuting22 + rainingnoncommuting22 + temp22 | Region | 0 | id, data = MERGED) ## ## Residuals: ## Min 1Q Median 3Q Max ## -0.154669 -0.026714 0.003494 0.028441 0.115703 ## ## Coefficients: ## Estimate Cluster s.e. t value Pr(>|t|) ## rainingcommuting22 4.440e-03 3.680e-03 1.207 0.229 ## rainingnoncommuting22 -1.467e-05 2.594e-03 -0.006 0.995 ## temp22 4.816e-04 9.895e-04 0.487 0.627 ## ## Residual standard error: 0.04091 on 308 degrees of freedom ## (62 observations deleted due to missingness) ## Multiple R-squared(full model): 0.3338 Adjusted R-squared: 0.31 ## Multiple R-squared(proj model): 0.006468 Adjusted R-squared: -0.02902 ## F-statistic(full model, *iid*):14.03 on 11 and 308 DF, p-value: < 2.2e-16 ## F-statistic(proj model): 0.7678 on 3 and 308 DF, p-value: 0.5128

Did the weather affect voting behavior?

There is a bit more here, the below regression suggest that higher temperatures were correlated with a higher percentage for the vote leave campaign. The turnout regressions suggested a potentially weakly lower turn out, as temperatures are higher. So did high temperatures induce Bremain voters to vote for Brexit?

The point estimate on temperature “temp23” significant at the 5% level, suggesting that a 1 degree increase in the temperature increased Brexit votes by 1 percentage point. This is a lot, but could just be a false positive – i.e. a statistical error. Nevertheless, maybe thats why there is so much to #Bregret? Did the heat of the moment induce voters to make a decision that they now regret?

summary(felm(Pct_Leave ~ rainingcommuting23 + rainingnoncommuting23+ temp23 | Region | 0 | id, data=MERGED))

## ## Call: ## felm(formula = Pct_Leave ~ rainingcommuting23 + rainingnoncommuting23 + temp23 | Region | 0 | id, data = MERGED) ## ## Residuals: ## Min 1Q Median 3Q Max ## -30.9663 -5.2630 0.1153 5.8665 27.1071 ## ## Coefficients: ## Estimate Cluster s.e. t value Pr(>|t|) ## rainingcommuting23 -0.5980 0.4794 -1.248 0.2132 ## rainingnoncommuting23 -0.3900 0.3903 -0.999 0.3185 ## temp23 1.1350 0.4935 2.300 0.0221 * ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 8.104 on 308 degrees of freedom ## (62 observations deleted due to missingness) ## Multiple R-squared(full model): 0.3729 Adjusted R-squared: 0.3505 ## Multiple R-squared(proj model): 0.0276 Adjusted R-squared: -0.007127 ## F-statistic(full model, *iid*):16.65 on 11 and 308 DF, p-value: < 2.2e-16 ## F-statistic(proj model): 2.47 on 3 and 308 DF, p-value: 0.06201

In order to gain a bit more confidence, we can check whether the weather conditions on the day before. Throughout, there is no indication that this was the case (see below).

summary(felm(Pct_Leave ~ rainingcommuting22 + rainingnoncommuting22+ temp22 | Region | 0 | id, data=MERGED))

## ## Call: ## felm(formula = Pct_Leave ~ rainingcommuting22 + rainingnoncommuting22 + temp22 | Region | 0 | id, data = MERGED) ## ## Residuals: ## Min 1Q Median 3Q Max ## -31.3071 -5.0842 0.5469 5.2357 30.5874 ## ## Coefficients: ## Estimate Cluster s.e. t value Pr(>|t|) ## rainingcommuting22 0.4547 0.7448 0.610 0.542 ## rainingnoncommuting22 -0.2837 0.5679 -0.500 0.618 ## temp22 0.1565 0.3447 0.454 0.650 ## ## Residual standard error: 8.208 on 308 degrees of freedom ## (62 observations deleted due to missingness) ## Multiple R-squared(full model): 0.3567 Adjusted R-squared: 0.3338 ## Multiple R-squared(proj model): 0.00249 Adjusted R-squared: -0.03314 ## F-statistic(full model, *iid*):15.53 on 11 and 308 DF, p-value: < 2.2e-16 ## F-statistic(proj model): 0.211 on 3 and 308 DF, p-value: 0.8888

What can we take from this?I was concinced that I could document an effect of rainfall during the commuting hours. Having experienced the Waterloo commute for a year, I could sympathize with the idea of not going to vote after a long day and a terrible commute. The retired can go vote any time during the day, why the commuters/ employed need to arrange this for the evening or mornign hours. Is this fair or de-facto disenfranchising a set of voters? With the crude data on rainfall, I do not find any evidence in support of the rainfall channel. This would have been policy relevant.

The temperature regressions indicate some weak evidence on voting behavior, suggesting higher Brexit vote share, but no statistically discernible effect on turn out.

There are a lot of buts and ifs here. The analysis is super coarse, but potentially with finer polling data, an actual analysis of commuting flows and better weather data, some points could be driven home.

Maybe that is just my attempt to cope with the Brexit shock. As a German living and working in the UK, I am quite shocked and devastated and worried about what the future holds for me, my partner and my friends here.

Thiemo Fetzer, Assistant Professor in Economics, University of Warwick.

Dropbox Link with all data and code